From MCP to A2A: Advancing Agent Evaluation

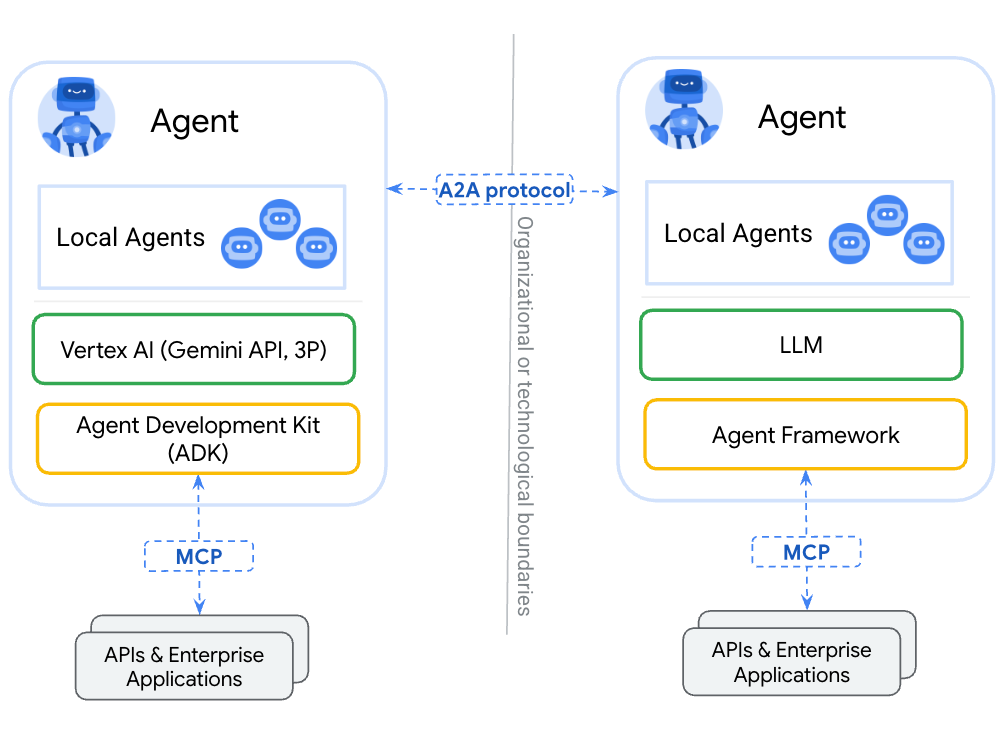

As AI agents evolve from performing isolated tasks to collaborating within multi-agent ecosystems, evaluating their performance becomes increasingly complex. In this discussion, we explore the progression from the Model Context Protocol (MCP) to Agent-to-Agent (A2A) protocols, highlighting their architectures, functionalities, and key evaluation metrics.

Why Protocols

Communication between Agent and Tools

Using the Model Context Protocol (MCP), an AI agent can invoke external tools through standardized, structured requests that can be secured and audited. Because every call follows the same message format, data formats stay predictable and errors are easier to trace, which reduces integration complexity and improves system robustness.

Communication between Agents

The Agent-to-Agent (A2A) protocol lets agents built by different vendors communicate with one another. A2A supports dynamic task delegation and context sharing, allowing heterogeneous agents to coordinate their work. This standardized framework improves interoperability and makes team-level decision-making across agents more efficient and better synchronized.

MCP: Structured and Standardized Tool Invocation

The Model Context Protocol (MCP) addresses the challenge of standardizing how large language models (LLMs) interact with external data sources and applications. It employs a client–server architecture:

- Client: Converts the LLM’s requests into structured JSON-RPC 2.0 messages and sends them to an MCP server.

- MCP Server: Acts as an intermediary by routing these standardized requests to the relevant external resource (such as APIs, tools, or applications), and then returning the structured responses.
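To make the client–server split concrete, the sketch below builds the kind of JSON-RPC 2.0 message an MCP client sends to invoke a tool (method `tools/call`, with the tool `name` and its `arguments`) and a toy in-process server that dispatches it and wraps the result as text content. The `get_weather` tool, its return value, and the hand-rolled dispatch are hypothetical; the transport (stdio or HTTP) is omitted, and a real deployment would use an MCP SDK.

```python
import json
from typing import Any, Callable

# Hypothetical tool exposed by a toy MCP-style server.
def get_weather(city: str) -> str:
    # Placeholder: a real tool would call an external weather API here.
    return f"Sunny in {city}"

TOOLS: dict[str, Callable[..., str]] = {"get_weather": get_weather}

def make_tool_call(request_id: int, name: str, arguments: dict[str, Any]) -> str:
    """Client side: serialize a JSON-RPC 2.0 `tools/call` request."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })

def handle_request(raw: str) -> str:
    """Server side: route the request to the named tool and wrap the result."""
    req = json.loads(raw)
    params = req["params"]
    tool = TOOLS.get(params["name"])
    if tool is None:
        # -32602 is the JSON-RPC "Invalid params" error code.
        return json.dumps({
            "jsonrpc": "2.0",
            "id": req["id"],
            "error": {"code": -32602, "message": f"Unknown tool: {params['name']}"},
        })
    text = tool(**params["arguments"])
    return json.dumps({
        "jsonrpc": "2.0",
        "id": req["id"],  # the response echoes the request id
        "result": {"content": [{"type": "text", "text": text}], "isError": False},
    })

request = make_tool_call(1, "get_weather", {"city": "Paris"})
response = json.loads(handle_request(request))
print(response["result"]["content"][0]["text"])  # Sunny in Paris
```

Only the message envelope matters here; a production server would also validate `arguments` against each tool's declared input schema before calling it.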

Key benefits include:

- Standardization: Any AI agent that supports MCP can directly invoke applications or tools equipped with an MCP server, eliminating the need for custom plugin integrations.

- Simplification: MCP reduces the complexity of connecting AI agents to external data and applications, enabling faster and more reliable deployments.

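- Observability and Traceability: Because each request and response is an explicit, structured message, the full request–response cycle can be logged, making actions auditable and reproducible. Tools such as MCP Inspector let developers inspect these exchanges interactively.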
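One way to get the auditability and reproducibility described above is to record every tool call as an append-only JSON Lines trace and later replay it to confirm the outputs are reproduced. The sketch below assumes a deterministic tool and an in-memory log; `TraceRecorder`, `call_tool`, `replay`, and the `add` tool are hypothetical names, not part of MCP Inspector or any MCP SDK.

```python
import json
import time
from typing import Any, Callable

def add(a: int, b: int) -> int:
    # Hypothetical deterministic tool used for illustration.
    return a + b

TOOLS: dict[str, Callable[..., Any]] = {"add": add}

class TraceRecorder:
    """Records each tool call as one JSON line (an append-only audit log)."""

    def __init__(self) -> None:
        self.lines: list[str] = []

    def call_tool(self, name: str, arguments: dict[str, Any]) -> Any:
        result = TOOLS[name](**arguments)
        self.lines.append(json.dumps({
            "ts": time.time(),       # when the call happened
            "tool": name,            # which tool was invoked
            "arguments": arguments,  # exact parameters sent
            "result": result,        # what came back
        }))
        return result

def replay(lines: list[str]) -> list[bool]:
    """Re-execute each logged call and report whether its result is reproduced."""
    matches = []
    for line in lines:
        entry = json.loads(line)
        matches.append(TOOLS[entry["tool"]](**entry["arguments"]) == entry["result"])
    return matches

recorder = TraceRecorder()
recorder.call_tool("add", {"a": 2, "b": 3})
recorder.call_tool("add", {"a": 10, "b": -4})
print(replay(recorder.lines))  # [True, True]
```

For tools with side effects or non-deterministic outputs (live APIs, clocks), re-execution would not match, so replay in practice often substitutes the recorded response instead of calling the tool again.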

Evaluation Focus under MCP:

- Contextual Accuracy: Verifying the correct tool is invoked with accurate parameters.

- Replayability: Ensuring that every action can be traced and reproduced for debugging or audit purposes.

- Prompt/Response Quality: Assessing whether multi-step intentions generated by the agent are clear, logical, and executable.
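Contextual accuracy can be scored by comparing the tool calls an agent actually made against a reference sequence for the same test case. The sketch below computes two simple ratios, tool-selection accuracy and exact argument match, over position-aligned calls. The `ToolCall` type, the flight-booking test data, and the strict position-by-position alignment are assumptions for illustration; real evaluation suites often use looser matching (for example, normalizing values or allowing reordered calls).

```python
from dataclasses import dataclass, field
from typing import Any

@dataclass
class ToolCall:
    name: str
    arguments: dict[str, Any] = field(default_factory=dict)

def score_tool_calls(expected: list[ToolCall], actual: list[ToolCall]) -> dict[str, float]:
    """Compare calls position by position; missing or extra calls count as misses."""
    total = max(len(expected), len(actual))
    if total == 0:
        return {"tool_accuracy": 1.0, "argument_accuracy": 1.0}
    right_tool = 0
    right_args = 0
    for exp, act in zip(expected, actual):
        if exp.name == act.name:
            right_tool += 1
            # Arguments only count as correct when the tool itself was correct.
            if exp.arguments == act.arguments:
                right_args += 1
    return {
        "tool_accuracy": right_tool / total,
        "argument_accuracy": right_args / total,
    }

expected = [ToolCall("search_flights", {"origin": "SFO", "dest": "JFK"}),
            ToolCall("book_flight", {"flight_id": "UA100"})]
actual = [ToolCall("search_flights", {"origin": "SFO", "dest": "EWR"}),
          ToolCall("book_flight", {"flight_id": "UA100"})]
print(score_tool_calls(expected, actual))
# {'tool_accuracy': 1.0, 'argument_accuracy': 0.5}
```

Here the agent picked the right tools but passed the wrong destination once, which a tool-only metric would miss; tracking the two ratios separately keeps that distinction visible.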

A2A: Enabling True Multi-Agent Collaboration

While MCP standardizes external tool invocation by a single AI agent, the Agent-to-Agent (A2A) protocol introduces a framework that allows AI agents—potentially built on different underlying frameworks and platforms—to communicate and collaborate effectively.

Key features of A2A include:

- Inter-Agent Communication: Agents can exchange information regardless of the framework or platform each one is built on.

- Dynamic Task Delegation: Agents can assign and delegate tasks among themselves based on individual strengths and availability, optimizing overall performance.

- Shared Context: By exchanging task state, messages, and results through the protocol, agents can coordinate their actions on complex tasks that require collective decision-making, without having to expose their internal memory or tools to one another.
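In A2A, each agent publishes an Agent Card, a JSON document describing its identity, endpoint, and skills, which other agents can read before delegating work. The sketch below uses a simplified card with only `name`, `url`, and `skills` (each skill carrying `tags`), plus a hypothetical routing rule that delegates a task to the agent whose skill tags overlap most with the task's required tags. The field subset, the example agents and URLs, and the overlap heuristic are illustrative assumptions, not the full A2A schema or a prescribed delegation algorithm.

```python
from typing import Optional

# Simplified, hypothetical Agent Cards; the real A2A schema has more fields.
AGENT_CARDS = [
    {
        "name": "travel-agent",
        "url": "https://travel.example.com/a2a",
        "skills": [{"id": "book_flight", "tags": ["travel", "flights", "booking"]}],
    },
    {
        "name": "expense-agent",
        "url": "https://expenses.example.com/a2a",
        "skills": [{"id": "file_expense", "tags": ["finance", "expenses", "reimbursement"]}],
    },
]

def skill_tags(card: dict) -> set[str]:
    """Collect every tag advertised across an agent's skills."""
    return {tag for skill in card["skills"] for tag in skill["tags"]}

def choose_agent(required_tags: set[str], cards: list[dict]) -> Optional[dict]:
    """Pick the agent whose advertised tags overlap most with the task; None if no overlap."""
    best, best_overlap = None, 0
    for card in cards:
        overlap = len(required_tags & skill_tags(card))
        if overlap > best_overlap:  # strict '>' keeps the first agent on ties
            best, best_overlap = card, overlap
    return best

chosen = choose_agent({"flights", "booking"}, AGENT_CARDS)
print(chosen["name"] if chosen else "no capable agent")  # travel-agent
```

Availability, which the delegation bullet also mentions, could be folded in by filtering out busy or unreachable agents before scoring.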

Evaluation Focus under A2A:

- Task Delegation Quality: Evaluating whether sub-tasks are appropriately assigned to the most capable agent.

- Communication Efficiency: Measuring how much inter-agent exchange (messages, tokens, or round trips) is needed to complete a task.

- Team-Level Outcomes: Assessing the collective performance of the multi-agent system in terms of task success rate, latency, and resilience.
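The three A2A evaluation dimensions listed above can be computed from a log of multi-agent episodes. The sketch below assumes each episode records which agent a sub-task was delegated to, which agent a reference label says should have handled it, how many inter-agent messages were exchanged, whether the task succeeded, and its end-to-end latency. The `Episode` fields, the messages-per-success definition, and the use of median latency are hypothetical choices for illustration.

```python
from dataclasses import dataclass
from statistics import median

@dataclass
class Episode:
    delegated_to: str   # agent that actually received the sub-task
    best_agent: str     # reference label for the most capable agent
    messages: int       # inter-agent messages exchanged
    succeeded: bool     # did the team complete the task?
    latency_s: float    # end-to-end latency in seconds

def team_metrics(episodes: list[Episode]) -> dict[str, float]:
    if not episodes:
        raise ValueError("need at least one episode")
    n = len(episodes)
    successes = [e for e in episodes if e.succeeded]
    return {
        # Task delegation quality: share of sub-tasks routed to the reference agent.
        "delegation_accuracy": sum(e.delegated_to == e.best_agent for e in episodes) / n,
        # Communication efficiency: total messages spent (including failed
        # attempts) per successful task; lower is better.
        "messages_per_success": (sum(e.messages for e in episodes) / len(successes)
                                 if successes else float("inf")),
        # Team-level outcomes: success rate and median latency.
        "success_rate": len(successes) / n,
        "median_latency_s": median(e.latency_s for e in episodes),
    }

log = [
    Episode("travel-agent", "travel-agent", 4, True, 2.0),
    Episode("expense-agent", "travel-agent", 9, False, 5.5),
    Episode("expense-agent", "expense-agent", 3, True, 1.5),
]
print(team_metrics(log))
```

Charging failed attempts to the successful ones penalizes teams that chatter without finishing, which a per-episode average would hide.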

Comparative Analysis: MCP vs A2A

Source: "Open standards for connecting Agents".

| Aspect | MCP (Single Agent) | A2A (Multi-Agent System) |
|---|---|---|
| Goal | Structured and standardized invocation of external tools | Seamless communication and collaboration among heterogeneous agents |
| Architecture | MCP Client <–> MCP Server <–> External Tool | Peer-to-peer communication among agents, with shared resources/tools |
| Interoperability | Any application with an MCP server can be directly invoked | Enables agents built on different frameworks and vendor platforms to interact |
| Primary Benefit | Eliminates the need for custom integrations for every plugin | Facilitates collective decision-making through effective inter-agent communication |
| Evaluation Metrics | Tool correctness, context accuracy, replayability | Task delegation, communication efficiency, system-wide coordination |
| Emergent Behavior | Limited to individual LLM responses | New strategies and problem-solving behaviors emerging from collaboration |

Future Directions: Towards a Unified Multi-Agent Ecosystem

To fully leverage the potential of collaborative AI systems, future developments should focus on:

- Unified Evaluation Standards: Establishing shared semantic metrics to assess both individual agents and collective performance.

- Contextual Traceability: Developing comprehensive methods to track the “who, what, when, and why” of actions across distributed systems.

- Enhanced Benchmarks: Introducing advanced multi-agent benchmarks to rigorously test coordination, memory sharing, and dynamic delegation capabilities.
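Tracking the "who, what, when, and why" across agents usually means giving every action a unique ID and a pointer to the action that caused it, similar in spirit to parent spans in distributed tracing. The sketch below is a hypothetical in-memory version: each `TraceEvent` records the acting agent (who), the action (what), a timestamp (when), and a `parent_id` plus free-text `reason` (why), and `causal_chain` walks back from any event to the original request. The field names and example events are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class TraceEvent:
    event_id: str
    agent: str                # who acted
    action: str               # what was done
    timestamp: float          # when (seconds since the episode started)
    reason: str               # why, in the agent's own words
    parent_id: Optional[str]  # event that triggered this one (None for the root)

def causal_chain(events: dict[str, TraceEvent], event_id: str) -> list[TraceEvent]:
    """Follow parent links from an event back to the root, returned root-first."""
    chain: list[TraceEvent] = []
    seen: set[str] = set()
    current: Optional[str] = event_id
    while current is not None:
        if current in seen:
            raise ValueError(f"cycle detected at {current}")
        seen.add(current)
        event = events[current]
        chain.append(event)
        current = event.parent_id
    return list(reversed(chain))

events = {e.event_id: e for e in [
    TraceEvent("e1", "planner", "receive_request", 0.0, "user asked to book a trip", None),
    TraceEvent("e2", "planner", "delegate:book_flight", 0.4, "travel-agent advertises flights", "e1"),
    TraceEvent("e3", "travel-agent", "tools/call:search_flights", 1.1, "need candidate flights", "e2"),
]}
for step in causal_chain(events, "e3"):
    print(step.agent, step.action, "because", step.reason)
```

In a deployed system these IDs would have to travel with each A2A message and MCP request (for example, in message metadata); how they are propagated is left open here.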

Conclusion

The evolution from MCP to A2A reflects a significant paradigm shift in AI agent capabilities:

- MCP ensures that AI agents can reliably and efficiently call external data and applications through a standardized protocol, simplifying integration and enhancing traceability. Google's Agent Development Kit (ADK) supports MCP tools, so a wide range of MCP servers can be used with ADK agents.

- A2A extends these benefits by enabling heterogeneous agents to collaborate, share context, and collectively tackle complex tasks, thereby unlocking a new level of collective intelligence. It is also an open, community-driven standard, and sample implementations are available for Google ADK, LangGraph, CrewAI, and other frameworks.

Together, these protocols lay the foundation for next-generation AI systems, where both individual performance and group synergy are essential for tackling real-world problems.