Think in Intents, Not APIs: MCP Architecture Patterns for AI-Centric Automation

Table of Contents generated with DocToc

- Understanding the Relationship Between Capabilities, Products, and Components

- MCP Server Patterns

- Conclusion and Key Take Aways

Co-Author: Ruchi Mahindru - Distinguished Engineer - IT Automation Research, IBM Master Inventor

As AI agents mature from research demos to production grade orchestration platforms, a structured, modular approach to tool exposure and invocation becomes essential. The Model Context Protocol (MCP) introduces a unifying layer that abstracts product APIs into meaningful, callable actions for agents, copilots, and LLM-based workflows. However, a one-size-fits-all MCP server design does not scale across complex enterprise systems that span observability, optimization, compliance, and other domains.

To address this, we explore three architectural patterns:

- Capability-Level MCP Server for high-level business intent abstraction

- Product-Level MCP Server for wrapping stable product APIs

- Component-Level MCP Server for fine-grained, microservice-level automation

This layered approach enables cross product orchestration, simplifies agent development, supports modular evolution, and aligns well with team-based ownership in modern DevOps organizations.

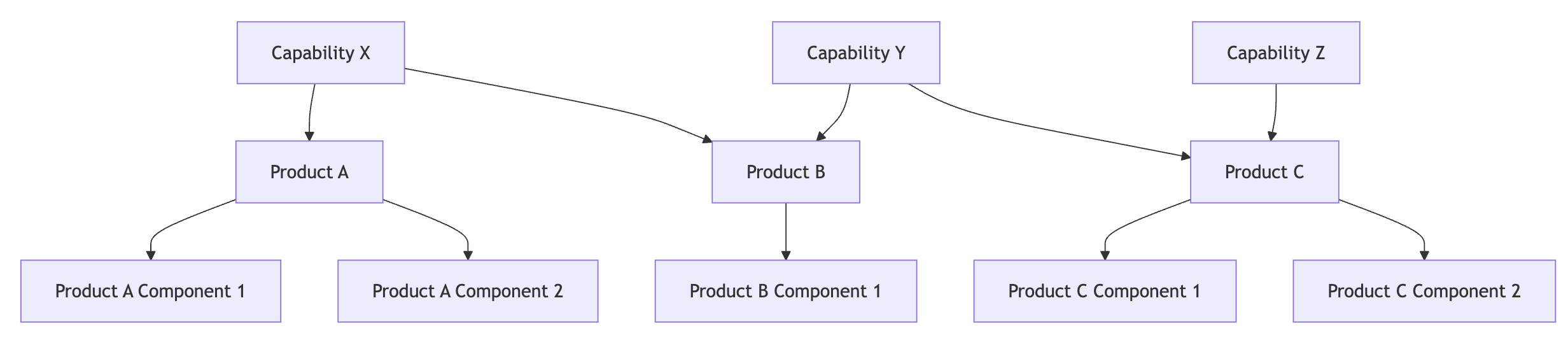

Understanding the Relationship Between Capabilities, Products, and Components

The diagram presents a three-tier architecture commonly used in enterprise software systems to enable modularity, integration, and AI orchestration. This structure is essential for building intelligent platforms that span multiple domains like observability, optimization, and compliance.

Capability Layer – What the user or agent wants to achieve

Capabilities represent high-level business goals or intents, abstracted from the underlying implementation. These are the actions that users, AI copilots, or workflow planners wish to perform.

Example Capabilities:

- Diagnose performance issues across infrastructure and services

- Automatically optimize resource allocation to reduce cost and latency

- Correlate anomalies with known compliance policies

- Forecast capacity needs and recommend scaling actions

- Generate health and risk reports for executive visibility

Key Point: A single capability may span multiple products. For instance, a capability that diagnoses performance degradation may use Instana to identify hotspots and Turbonomic to recommend actions.

Product Layer – What each platform provides as a packaged service

Products are independent enterprise systems that deliver distinct functional areas. Each product:

- Implements multiple capabilities

- Owns stable APIs

- Is maintained by a dedicated team

Products can interact with each other via federated workflows, especially in complex capabilities that require data correlation or multi-system orchestration.

Component Layer – How the product’s capabilities are implemented

Components are independent microservices or modules inside a product that perform specific technical tasks. These components handle granular functions like:

- Collecting metrics

- Generating forecasts

- Enforcing policies

- Performing health checks

They support separate deployment, testing, and monitoring.

MCP Server Patterns

Capability-Level MCP Server - “Goal-Oriented – abstracts business-level intents”

A Capability-Level MCP Server abstracts and exposes a specific business intent, such as “diagnose incidents,” “optimize performance,” or “generate compliance reports.” It provides a semantically meaningful, cross-product interface that aligns with what LLMs or users want to achieve, rather than how it is implemented.

Key Characteristics

- Intent-Centric Interface: Exposes tools named and designed around business actions (e.g., analyzePerformance, createForecast, generateAuditReport).

- Cross-Product Aggregation: May route to multiple underlying products (e.g., Instana for detection, Turbonomic for action).

- Stable Semantics, Flexible Backend: Backend tools can evolve or be replaced without changing the capability contract.

- LLM/Planner Friendly: Ideal for chatbots, AI agents, and automation orchestrators.

- Orchestration Gateway: Coordinates sub-calls across Product or Component Level MCP Servers.

- Optional Registry Publication: Published if capability abstraction is stable.

Best Practices

- Name tools after business verbs, not implementation details. E.g., planMigration instead of callProductX_API_Y.

- Implement smart routing, e.g., dispatch generateReport to Instana for metrics, Concert for policy compliance.

- Encapsulate backend details using workflows, fallback chains, or policy-driven logic.

- Use declarative inputs to let AI agents describe goals rather than implementation steps.

When to Use

- AI-Driven Automation:

- Your platform enables natural language interfaces or LLM copilots.

- You support multi-product orchestration where users care about outcomes, not tools.

- Unified Integration Scenarios:

- You need to unify workflows across observability, optimization, and compliance.

- Your users are non-developers, who require a simple, intent-driven interface.

Key Benefits of this Architectural Pattern

- Cross-Domain Orchestration: Allows orchestration logic to span multiple backend products (e.g., Instana, Turbonomic, Concert) without coupling to specific APIs or tools.

- Business-Driven Modularity: Capabilities represent business-aligned abstractions that remain stable even as products or implementations change behind the scenes.

- Faster Agent Development: Greatly simplifies AI agent logic, allowing them to call meaningful actions without low-level API knowledge.

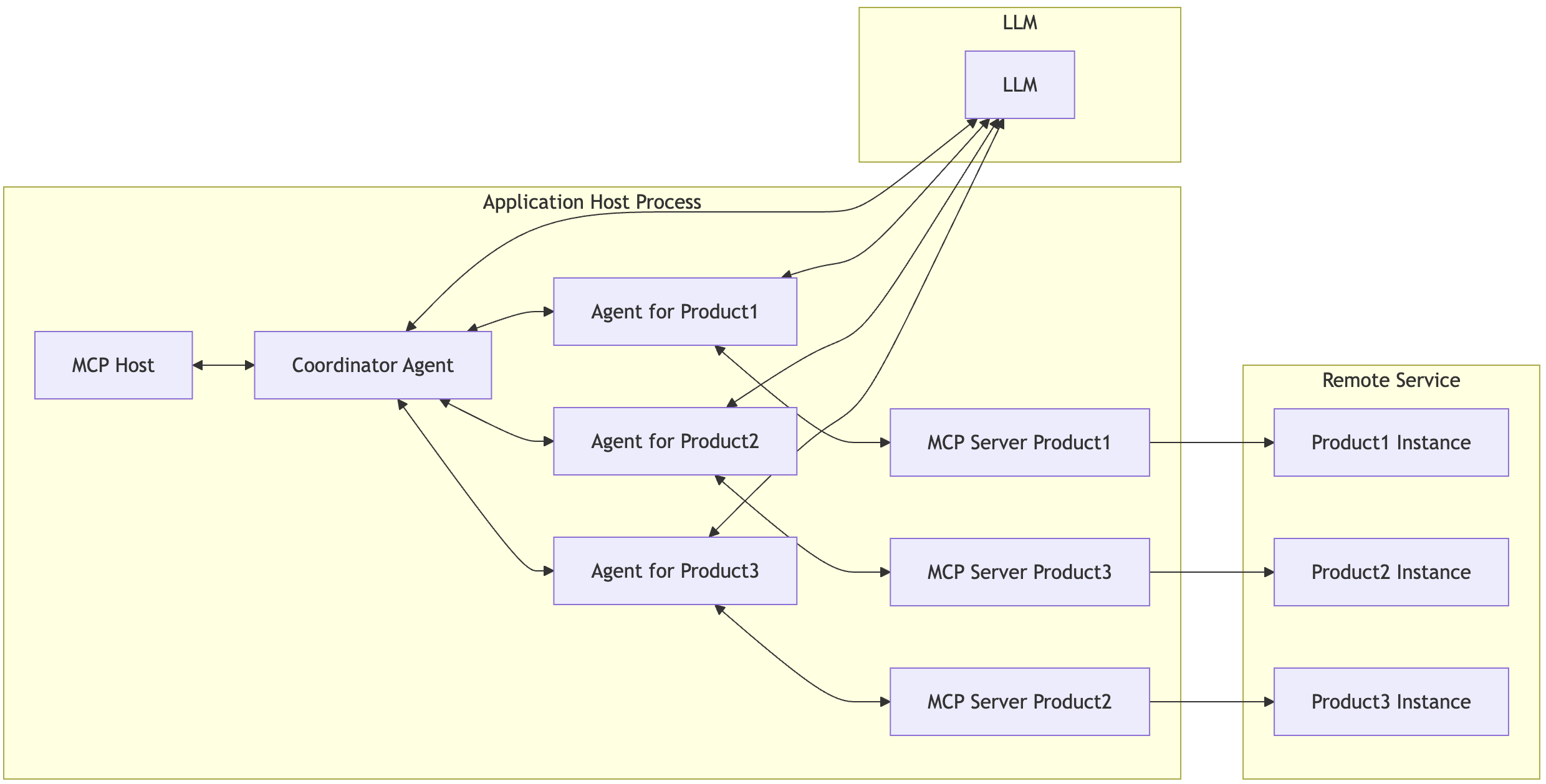

Product-Level MCP Server - “System-Oriented – wraps complete product APIs”

A single MCP server that wraps and exposes only the stable, supported, and public-facing APIs of an entire product.

The proposal here is very similar with ACP Advanced Multi-Agent as below:

Key Characteristics

- Scoped Tooling: Manages a curated set of tools and APIs for a specific product. For example:

- Instana MCP Server (observability platform)

- Turbo MCP Server (optimization platform)

- Concert Server (compliance platform)

- Stable Interface: Exposes only mature, backward-compatible APIs.

- External Integration: Designed for other products, partners, and customers to use.

- Registry Ready: Can be safely published to an MCP Registry.

- Security Focused:

- Implements authentication, authorization, and rate-limiting.

- Restricts data exposure (e.g. filters sensitive fields, role-based API Access)

- Autonomy: Product teams own and iterate independently of central AI or platform teams.

Best Practices

- Define a clear interface via OpenAPI specs, MCP tool schemas, and validation rules to enforce reliable tool exposure.

- Minimize tool scopes by exposing only what is essential to keep toolset lean and secure.

- Build with observability by integrating logging, metering, and tracing to monitor AI-driven tool usage.

- Encapsulate business logic to avoid leaking low-level implementation details.

When to Use

- Integration and Discoverability:

- Your product has well-defined and stable APIs.

- You need to enable external LLMs or third-party systems to integrate easily with your product.

- You want your product discoverable via MCP Registries for AI agents and orchestration layers.

- You support chatbot interfaces, natural language automation, or integration platforms that rely on dynamic tool discovery.

- Security and Governance:

- You require fine-grained control over which APIs or data fields are exposed, especially to protect sensitive or personal information.

- You want centralized governance and lifecycle management for the APIs

3) Team Autonomy and Deployment Flexibility:

- Your product team operates independently and needs to integrate with AI Agents without replying on central platform team’s updates or roadmap.

- Your deployment model includes BYOC, on-prem, or hybrid environments that demand close proximity of the AI runtime to the product.

- You support customer-specific configurations, where different clients require different sets of tool, permissions, and workflows.

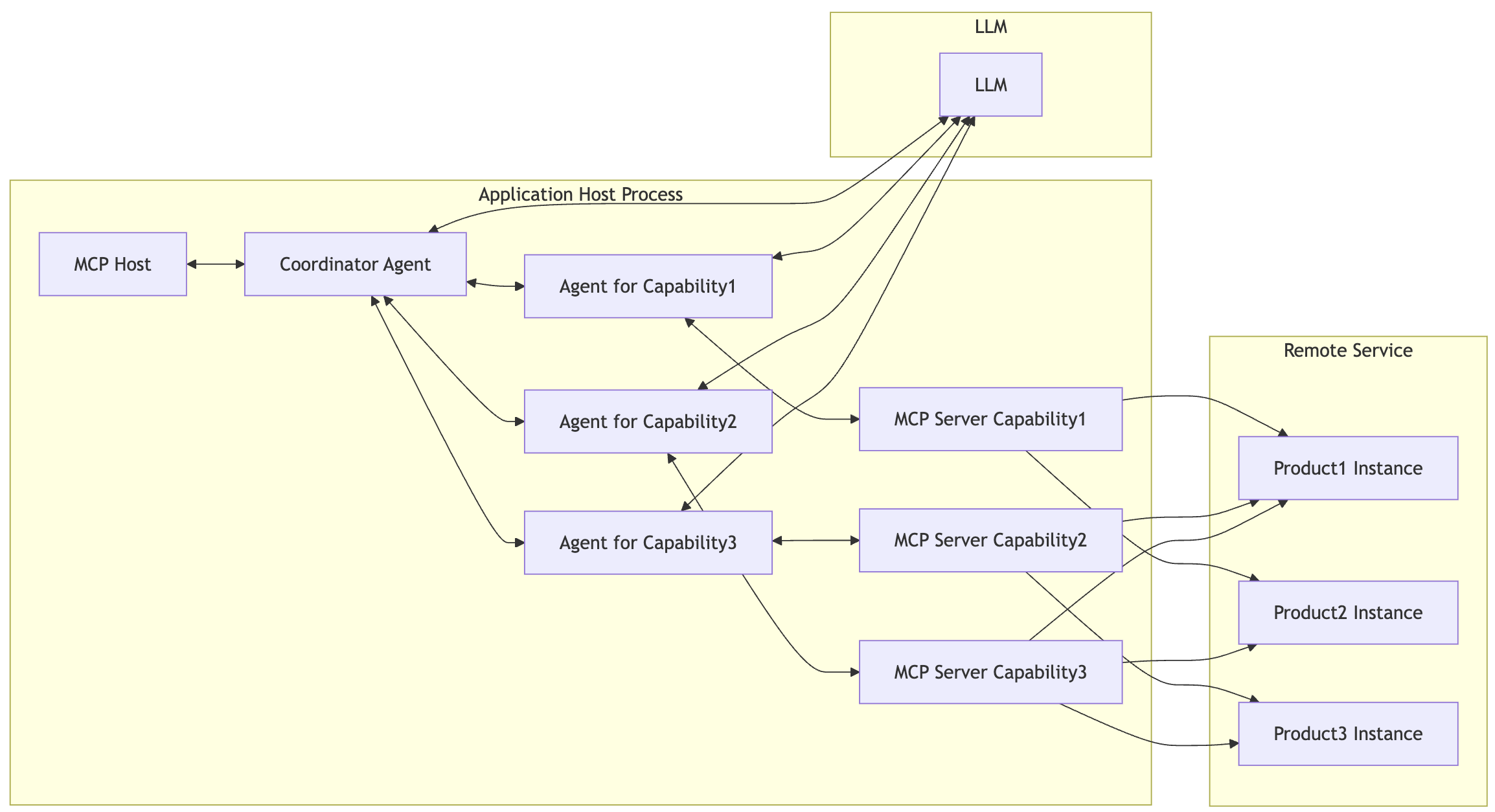

If you request some data correlation or federated API calls in sequence across different products, you may want to have a dedicate agent for each MCP server for the product as below.

The architecture below enables intelligent orchestration and execution of federated API calls and data correlation workflows across multiple backend enterprise products by leveraging a modular MCP-based design.

At the heart of this architecture is the concept of agent delegation, each backend product (e.g., Instana, Turbonomic, AIOps) is wrapped with a dedicated agent and served via a corresponding MCP server, allowing product specific logic to be encapsulated while adhering to a standard protocol interface.

Key Benefits of this Architectural Pattern

- Scalability: Each product agent and its corresponding MCP server can be scaled independently, making it ideal for high-throughput enterprise-grade workloads.

- Isolation and Fault Tolerance: Integration failures in one product do not affect others. Each agent can implement retries, fallbacks, or circuit breakers without affecting the broader system.

- Observability and Tracing: With dedicated logging and telemetry per agent/server pair, teams gain fine-grained visibility. Thereby, making it easier to debug, monitor, and optimize AI-driven workflows.

- Plug-and-Play Modularity: New products or services can be integrated by simply deploying a new agent and MCP server pair. Therefore, no changes required to existing agents or orchestration logic.

- LLM-Aware Orchestration: Enables LLMs to reason across tools and compose semantically rich, multi-step workflows. Thereby, improving accuracy, adaptability, and intent resolution.

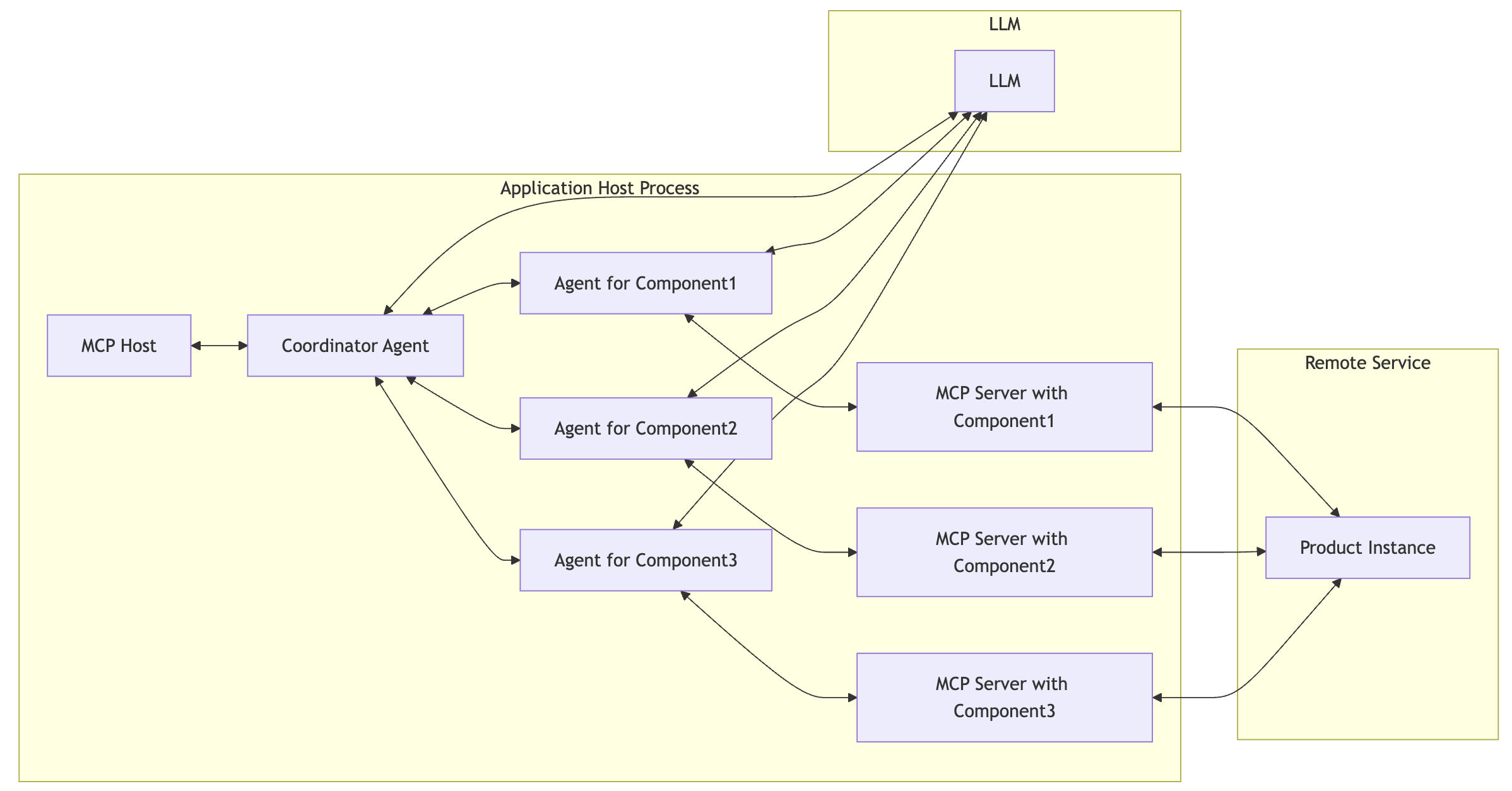

Component-Level MCP Server - “Function-Oriented – exposes individual service functionality”

A Component-Level MCP Server wraps and exposes the internal APIs of a single product component or microservice. It is ideal for fine-grained automation, internal AI agents, or when capabilities are distributed across modular components.

Key Characteristics

- Microservice Focused: Tied to one component (e.g., Metrics Engine, Alert Processor, Forecast Generator).

- Internal Interfaces Only: Typically not published externally.

- Rapidly Evolvable: Component teams can iterate on APIs independently.

- Highly Granular: Each MCP Server may expose only a few tightly scoped tools.

- Lightweight Security: May use service mesh, JWTs, or internal service auth.

Best Practices

- Isolate Failure Domains: Allow retries, circuit breakers per component.

- Ensure Deep Observability: Allow debugging and internal tooling (logs, traces, metrics).

- Avoid Tool Overexposure: Keep tools narrowly defined and internal unless promoted to product-level.

- Encapsulate Domain Logic: Prevent leaking implementation details to callers.

When to Use

- Modular Architecture:

- Your product is composed of microservices or subsystems.

- Each team owns and evolves its own interfaces.

- Internal Multi-Agent Use Cases:

- You want fine-grained orchestration within a product.

- You are prototyping fast or testing new agent integrations.

- Platform Composition:

- You plan to compose stable product-level APIs from loosely coupled component servers.

Key Benefits of this Architectural Pattern

- Fine-Grained Modularity: Allows deep decomposition of product logic into individual tool surfaces, making internal components independently callable and testable.

- Agile Iteration: Each microservice or subsystem can evolve its automation surface rapidly without waiting on centralized product coordination.

- Internal Orchestration Support: Enables AI agents or developer tools to perform multi-step automation across tightly scoped components with low latency.

- Improved Debugging and Observability: Component-level granularity makes it easier to trace issues, measure usage, and fine-tune specific pieces of the system.

- Team Ownership Alignment: Fits well with DevOps and squad-based teams where each team owns a microservice and its automation contract.

- Safe Experimentation: Perfect for prototyping new tools, testing LLM interactions, or building internal AI copilots before surfacing capabilities at the product level.

Conclusion and Key Take Aways

Capability-Level MCP Server Architecture Pattern:

- Enable AI agents to think and act in terms of business outcomes, not low-level APIs.

- Provide stable, intent-driven interfaces that abstract complex systems, support cross-product orchestration, and adapt seamlessly as backend tools evolve, empowering copilots and planners to deliver meaningful automation with clarity, precision, and flexibility.

Product-Level MCP Server Architecture Pattern:

- Simplify and secure AI access to enterprise products by exposing only stable, governed APIs.

- Serve as registry-ready gateways that enable safe, scalable integration for LLMs and agents, while maintaining control, observability, and team autonomy. This approach balances ease of use with strict governance, ideal for hybrid environments, external partners, and chatbot interfaces.

Component-Level MCP Server Architecture Pattern:

- Expose microservice-level APIs to enable fast, granular, and testable AI automation.

- Designed for internal use, they support prototyping, fine-grained orchestration, and iterative development—giving teams agility and control without coupling to broader platform constraints.

- Ideal for DevOps squads building intelligent workflows from the ground up, one component at a time.